
Architecture

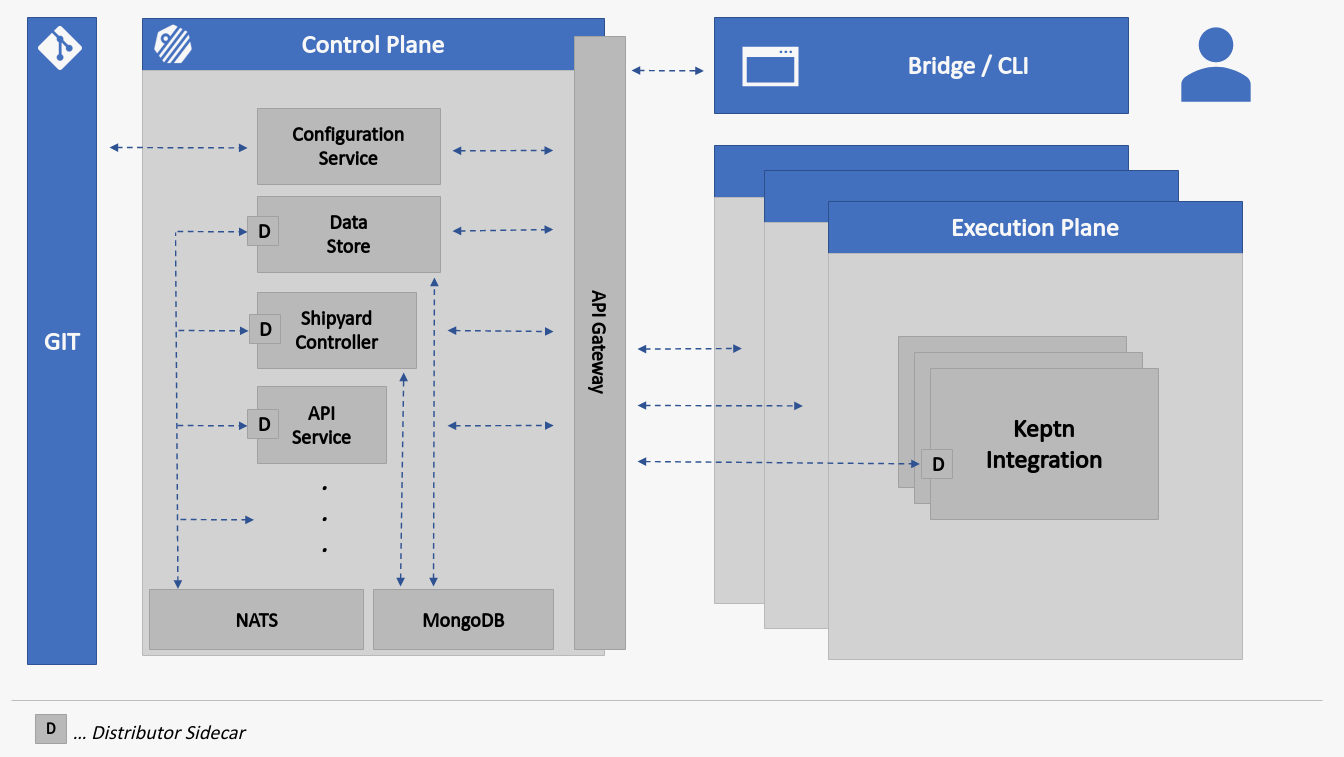

Keptn is an event-based control plane for continuous delivery and automated operations that runs on Kubernetes. Keptn itself follows an event-driven approach, with the benefits of loosely coupled components and a flexible design that makes it easy to integrate other components and services. The events that Keptn understands are specified here and follow the CloudEvents specification, version 1.0.
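As a rough illustration of what an event looks like on the wire, the sketch below builds a CloudEvents 1.0 envelope in Python. The required context attributes (specversion, id, source, type) come from the CloudEvents specification; the shape of the data payload, the source value, and the project, stage, and service names are assumptions made for this example and should be checked against the Keptn event specification.

```python
import json
import uuid
from datetime import datetime, timezone


def make_keptn_event(event_type, project, stage, service, source="example-client"):
    """Build a CloudEvents 1.0 envelope around a minimal, assumed Keptn-style payload."""
    return {
        "specversion": "1.0",                  # required CloudEvents attribute
        "id": str(uuid.uuid4()),               # required: unique per event
        "source": source,                      # required: hypothetical producer name
        "type": event_type,                    # required: the Keptn event type
        "time": datetime.now(timezone.utc).isoformat(),
        "datacontenttype": "application/json",
        # Assumed payload fields; the real schema is defined by the Keptn spec.
        "data": {"project": project, "stage": stage, "service": service},
    }


# Hypothetical project and service names, used only for illustration.
event = make_keptn_event("sh.keptn.event.dev.delivery.triggered", "sockshop", "dev", "carts")
print(json.dumps(event, indent=2))
```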

This event-driven architecture means that Keptn is tool and vendor agnostic. See Keptn and other tools for a fuller discussion.

NATS

During Keptn installation, NATS is installed into the Kubernetes namespace where the Keptn Control Plane is installed. NATS is used to communicate with the Execution Plane as discussed below.

Keptn CLI

Use the Keptn CLI to send commands that interact with the Keptn API. It must be installed on the local machine.

To communicate with Keptn, you need to know the API token (keptn-api-token), which is created during the installation via Helm and verified by the api component.
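Any client that talks to the API needs to present the same token as the CLI. The following minimal sketch prepares, but does not send, an authenticated request using Python's standard library. It assumes the token is passed in the x-token header and that a metadata endpoint is reachable at /api/v1/metadata; the host name and token value are placeholders.

```python
import urllib.request


def build_api_request(base_url, api_token, path="/api/v1/metadata"):
    """Prepare (but do not send) an authenticated GET request to the Keptn API."""
    url = base_url.rstrip("/") + path
    return urllib.request.Request(
        url,
        # Assumption: the API expects the token in the x-token header.
        headers={"x-token": api_token, "Accept": "application/json"},
        method="GET",
    )


# Placeholder host and token; substitute the values of your own installation.
request = build_api_request("https://keptn.example.com/", "REPLACE_WITH_KEPTN_API_TOKEN")
print(request.get_method(), request.full_url)
```

Passing the prepared request to urllib.request.urlopen would perform the call; that step is left out here so the sketch runs without a Keptn installation.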

Keptn Bridge

The Keptn Bridge is a user interface that can be used to view and manage Keptn projects and services.

See Keptn Bridge for information about how to access and use the Keptn Bridge.

Keptn Control Plane

The Keptn Control Plane runs the basic components that are required to run Keptn and to manage projects, stages, and services. This includes handling events and providing integration points. It orchestrates the task sequences that are defined in the shipyard but does not actively execute the tasks.

api-gateway-nginx

The api-gateway-nginx component is the single point used for exposing Keptn to the outside world. It supports the four access options that Kubernetes supports: LoadBalancer, NodePort, Ingress, and Port-Forward.

It also redirects incoming requests to the appropriate internal Keptn endpoints – api, bridge, or resource-service.

api-service

The Keptn API provides a REST API that allows you to communicate with Keptn. It provides endpoints to authenticate, get metadata about the Keptn installation within the cluster, forward CloudEvents to the NATS cluster, and trigger evaluations for a service.

mongodb-datastore

The mongodb-datastore stores event data in a MongoDB that, by default, is deployed in your Keptn namespace. You can instead use an externally hosted MongoDB by configuring the connectionString fields in the values.yaml file. See Install Keptn with externally hosted MongoDB for more information.

The service provides the REST endpoint /events to query events.

The mongodb-datastore and shipyard-controller pods have direct connections to mongodb (keptn-mongo).

resource-service

The resource-service is a Keptn core component that manages resources for Keptn project-related entities, i.e., project, stage, and service. This replaces the configuration-service that was used in Keptn releases before 0.16.x.

It uses the Git-based upstream repository to store the resources with version control. This service can upload the Git repository to any Git-based service such as GitLab, GitHub, and Bitbucket.

The resource-service hosts this file in an emptyDir volume so that a new pod of the service always starts with an empty volume. Note that, in earlier releases, this file was mounted as a Persistent Volume Claim (PVC).

shipyard-controller

The shipyard-controller manages all Keptn-related entities, such as projects, stages and services, and provides an HTTP API that is used to perform CRUD operations on them.

This service also controls the execution of task sequences that are defined in the project’s shipyard by sending out .triggered events whenever a task within a task sequence should be executed. It then listens for incoming .started and .finished events and uses them to proceed with the task sequence.
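The triggered/started/finished handshake can be pictured as a small state machine. The sketch below is a toy model written for this page, not the shipyard-controller implementation: the SequenceRunner class is hypothetical, it tracks a single sequence, and it advances as soon as one .finished event for the current task arrives. The task names are illustrative.

```python
class SequenceRunner:
    """Toy model of a task sequence: trigger a task, wait for .finished, move on."""

    def __init__(self, tasks):
        self.tasks = list(tasks)
        self.index = 0               # position of the task currently in flight
        self.sent = []               # event types this model has sent out
        self.done = not self.tasks   # an empty sequence has nothing to do

    def start(self):
        self._trigger_current()

    def _trigger_current(self):
        if self.index < len(self.tasks):
            self.sent.append(f"sh.keptn.event.{self.tasks[self.index]}.triggered")
        else:
            self.done = True

    def on_event(self, event_type):
        """React to an incoming event from an execution plane service."""
        if self.done:
            return
        current = self.tasks[self.index]
        if event_type == f"sh.keptn.event.{current}.finished":
            self.index += 1
            self._trigger_current()
        # A .started event only confirms pickup, so it does not advance the sequence.


runner = SequenceRunner(["deployment", "test", "evaluation"])
runner.start()
for incoming in [
    "sh.keptn.event.deployment.started",
    "sh.keptn.event.deployment.finished",
    "sh.keptn.event.test.started",
    "sh.keptn.event.test.finished",
]:
    runner.on_event(incoming)
print(runner.sent)
```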

Execution Plane Services

The Keptn Execution Plane hosts the Keptn-services that integrate the tools that are used to process the tasks. The Keptn cluster can contain a single Execution Plane that is installed either in the same Kubernetes cluster as the Control Plane or in a different Kubernetes cluster. You can also configure multiple Execution Planes on multiple Kubernetes clusters.

Keptn-services react to .triggered Keptn events that are sent by the shipyard-controller. They perform continuous delivery tasks, like deploying or promoting a service, and orchestration tasks for automating operations. Those services can be plugged into a task sequence to extend the delivery pipeline or to further automate operations.

Execution plane services subscribe to events using one of the following mechanisms:

- distributor sidecar that forwards incoming .triggered events to execution plane services. These distributor sidecars can also be used to send .started and .finished events back to the Keptn control plane. This was the original Keptn mechanism for sending events to services.

- cp-connector (Control Plane Connector) uses Go code to handle the logic of an integration connecting back to the control plane. This mechanism was introduced in Release 0.15.x and is used by all core Keptn services. The distributor pod is not required, but it requires more coding in each service.

- go-sdk – Provides a wrapper that adds features around the cp-connector. All newer services and most Keptn internal services use go-sdk.

The default Keptn installation includes Keptn-services for some Execution Plane services, including:

- lighthouse-service: conducts a quality evaluation based on configured SLOs/SLIs.

- approval-service: implements the automatic quality gate in each stage where the approval strategy has been set to automatic. In other words, it sends an approval.finished event which contains information about whether a task sequence (such as the artifact delivery) should continue or not. If the approval strategy within a stage has been set to manual, the approval-service does not respond with any event since, in that case, the user is responsible for sending an approval.finished event (using either the Keptn Bridge or the API).

- remediation-service: determines the action to be performed in remediation workflows.

- mongodb-datastore: stores event data in the MongoDB that is deployed in the cluster, as discussed above.
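The automatic versus manual behavior described for the approval-service can be summarized in a few lines. This is a simplified sketch, not the service's code: the function name, the result field, and its pass/fail values are assumptions made for illustration.

```python
def approval_response(strategy, evaluation_passed):
    """Return the event an approval step would send, or None if it stays silent."""
    if strategy == "automatic":
        return {
            "type": "sh.keptn.event.approval.finished",
            # Assumed payload: signals whether the task sequence should continue.
            "data": {"result": "pass" if evaluation_passed else "fail"},
        }
    if strategy == "manual":
        # No event is sent; a user sends approval.finished via the Bridge or the API.
        return None
    raise ValueError(f"unknown approval strategy: {strategy!r}")


print(approval_response("automatic", True))
print(approval_response("manual", True))
```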

You also need a service to create/modify the configuration of a service that is going to be onboarded, fetch configuration files from the resource-service (the configuration-service in releases before 0.16.x), and apply the configurations. In older Keptn releases, the helm-service was included in the default Keptn distribution for this purpose and it is still the most popular solution.

Any of these services can be replaced by a service for another tool that reacts to and sends the same signals. See Keptn and other tools for more information.

Execution plane services can be operated within the same cluster as the Keptn Control Plane or in a different Kubernetes cluster. In either case, NATS is installed into the Kubernetes namespace where the Keptn Control Plane is installed to communicate with the Execution Plane.

- When the Control Plane and Execution Plane are operated within the same cluster, the services can directly access the HTTP APIs provided by the control plane services, without having to authenticate. In this case, the distributor sidecars or cp-connectors directly connect themselves to the NATS cluster to subscribe to topics and send back events.

- When an execution plane is operated outside of the cluster, it can communicate with the HTTP API exposed by the api-gateway-nginx. In this case, each request to the API must be authenticated using keptn-api-token. The distributor sidecars and cp-connectors are not able to directly connect to the NATS cluster, but they can be configured to fetch open .triggered events from the HTTP API.

See Integrations for links to Keptn-service integrations that are available. Use the information in Custom Integrations to create a Keptn-service that integrates other tools.

NATS behavior on a single-cluster instance

On a single-cluster Keptn instance, the Keptn control plane and execution plane are both installed on the same cluster and they communicate using NATS. Execution plane service pods have a distributor container that subscribes to and publishes events on behalf of the execution plane service.

Environment variables documented on the distributor reference page control how the distributor behaves, including setting the PUBSUB_URL environment variable that the distributor uses to locate the NATS cluster.

The flow can be summarized as follows.

Note that this discussion assumes using helm-service and tasks like deployment, but other services could be used for this processing and any tool could listen for tasks with names other than those of the standard tasks that are documented on the shipyard reference page.

- The distributor for the execution plane services on a control plane handles the subscribe and publish operations for the execution plane service by subscribing to NATS subjects that Keptn creates dynamically.

  For sequence-level events:

      sh.keptn.event.<stage>.<sequence>.<verb>

  For example, the control plane might publish the following to the subject:

      sh.keptn.event.dev.delivery.triggered
      sh.keptn.event.deployment.triggered

  For task-level events:

      sh.keptn.event.<task>.<verb>

  Optionally, the control plane can send one or more events in the form:

      sh.keptn.<task>.status.changed

  This is useful to signal status updates during long-running tasks.

- The Helm-Service Distributor (HSD) subscribes to the subject:

      sh.keptn.event.deployment.triggered

  And receives:

      sh.keptn.event.deployment.triggered

  as well as the JSON event body.

- HSD triggers the helm-service and publishes to the subject:

      sh.keptn.event.deployment.started

  as well as the JSON event body.

- The helm-service finishes the deployment and the HSD publishes to the subject:

      sh.keptn.event.deployment.finished

  as well as the JSON event body.
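The subject patterns used in the flow above can be captured in a few helper functions. This is a minimal sketch that mirrors the patterns exactly as written on this page; the function names are hypothetical, and restricting the verb to triggered, started, and finished is an assumption based on the events discussed here.

```python
# Verbs mentioned on this page; treating the set as closed is an assumption.
VERBS = {"triggered", "started", "finished"}


def _check_verb(verb):
    if verb not in VERBS:
        raise ValueError(f"unsupported verb: {verb!r}")


def sequence_subject(stage, sequence, verb):
    """Subject for sequence-level events: sh.keptn.event.<stage>.<sequence>.<verb>"""
    _check_verb(verb)
    return f"sh.keptn.event.{stage}.{sequence}.{verb}"


def task_subject(task, verb):
    """Subject for task-level events: sh.keptn.event.<task>.<verb>"""
    _check_verb(verb)
    return f"sh.keptn.event.{task}.{verb}"


def status_changed_subject(task):
    """Subject for optional status updates: sh.keptn.<task>.status.changed"""
    return f"sh.keptn.{task}.status.changed"


# Walk through the deployment flow described above.
flow = [task_subject("deployment", verb) for verb in ("triggered", "started", "finished")]
print(sequence_subject("dev", "delivery", "triggered"))
print(flow)
```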

NATS behavior on a multi-cluster instance

In a multi-cluster configuration, an execution plane is a namespace or cluster, other than the one where the control plane runs, that runs a Keptn service. The distributor was originally designed to work for both the control plane and the remote execution planes but, for recent releases, most execution plane services use go-sdk rather than the distributor.

Services that use go-sdk on the execution plane communicate over NATS, but the execution plane distributor polls the NATS subjects through the Keptn API: it polls the /api/v1/event endpoint on the control plane using HTTPS.

The following Keptn core services use go-sdk and are connected to NATS using the NATS environment variable NATS_URL to determine the URL: shipyard-controller, remediation-service, mongodb-datastore, lighthouse-service, and approval-service.

By default, the value of the NATS_URL environment variable is the same as the value of the distributor’s PUBSUB_URL environment variable. Keptn does not currently support a ConfigMap that contains the NATS_URL so it must be set as an environment variable in the Helm chart for each configured service.
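From a service's point of view, the NATS address is simply read from its environment. The sketch below shows one way a custom integration might resolve it. The fallback to PUBSUB_URL and the default value nats://keptn-nats are assumptions made for this example, not documented Keptn behavior; check the Helm chart of your release for the actual values.

```python
import os

# Assumed placeholder default; verify against the Helm chart of your release.
ASSUMED_DEFAULT_NATS_URL = "nats://keptn-nats"


def resolve_nats_url(env=None):
    """Pick the NATS URL from NATS_URL, then PUBSUB_URL, then an assumed default."""
    env = os.environ if env is None else env
    return env.get("NATS_URL") or env.get("PUBSUB_URL") or ASSUMED_DEFAULT_NATS_URL


# Hypothetical host name, used only to show the lookup order.
print(resolve_nats_url({"NATS_URL": "nats://nats.example.internal:4222"}))
print(resolve_nats_url({}))
```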

The helm-service and Job Executor Service (JES) use the distributor’s API proxy feature to communicate with the resource-service, so any configuration changes must be applied to the distributor and not the helm-service or JES.

How events are handled

Keptn uses NATS JetStream to propagate events. This means that, after an event has been successfully sent to the Keptn API, it is persisted by NATS JetStream.

The shipyard-controller coordinates the sequence executions. If the shipyard-controller is down when the event is sent, it can pick it up from the event stream when it is up and running again so that any events sent to the API while it was down are not lost.

The API client must ensure that the event has been successfully sent to the API. If the Keptn API endpoint for ingesting events is down for some reason, it cannot receive events during this time, and integrations/tools must re-send those events.
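Because delivery to the API is the client's responsibility, an integration typically wraps its send call in a retry loop. The following minimal sketch retries with exponential backoff. The send_with_retry helper, its parameters, and the choice to retry only on OSError (which covers connection failures and urllib's URLError) are assumptions for illustration; the flaky_send function only simulates an API that is temporarily down.

```python
import time


def send_with_retry(send, event, attempts=5, base_delay=0.5, sleep=time.sleep):
    """Call send(event) until it succeeds or the attempts are exhausted."""
    last_error = None
    for attempt in range(attempts):
        try:
            return send(event)
        except OSError as error:  # connection errors; urllib's URLError is a subclass
            last_error = error
            if attempt < attempts - 1:
                sleep(base_delay * 2 ** attempt)  # wait 0.5s, 1s, 2s, ...
    raise RuntimeError(f"event not delivered after {attempts} attempts") from last_error


# Simulated sender: fails twice, then accepts the event.
calls = {"count": 0}


def flaky_send(event):
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("API unavailable")
    return "accepted"


delays = []  # record the backoff delays instead of actually sleeping
result = send_with_retry(flaky_send, {"type": "sh.keptn.event.dev.delivery.triggered"},
                         sleep=delays.append)
print(result, delays)
```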

See also

- Architecture: Learn about the architecture, how Keptn works internally, and how it can be extended.

- Automated Operations: Learn about the core use-case of automated operations.

- Continuous Performance Verification: Learn about continuous performance verification with Keptn.

- Declarative Multi-Stage Delivery: Learn about the core use-case of declarative multi-stage delivery.